The Challenge

AI was exploding, but there was no single hub to compare, test, and use the best tools in one place.

"I waste half my day switching between ChatGPT, Claude, and others."

— AI Knowledge Worker

Initial Constraints

- Limited Budget — Needed to demonstrate MVP quickly with minimal resources

- Investor Validation — Prove scalable SaaS opportunity and product-market fit

Discovery & Research

Understanding the AI aggregation landscape

Competitive Analysis

Evaluated 30+ AI tools across features, pricing, and UX patterns

20-page comprehensive report

User Interviews

12+ interviews with AI researchers and knowledge workers

Identified 3 core persona types

Competitive Analysis Highlights

| Tool | Cost | Features | UX |
|---|---|---|---|
| ChatGPT | $$$ | | |
| Claude | $$ | | |
| Midjourney | $$ | | |
| +27 more tools analyzed | n/a | n/a | n/a |

User Interview Highlights

"I spend more time managing subscriptions than actually using the AI tools. It's exhausting."

"There's no easy way to compare outputs from different models. I have to copy-paste everything manually."

"I need something that works across my entire team, but every tool has different pricing tiers and limitations."

Synthesis & Key Insights

Affinity Mapping Session

Identified patterns from 12+ interviews, yielding 3 core persona types

Aggregation is the entry point

Users need a single dashboard to test and compare multiple AI tools before committing to subscriptions.

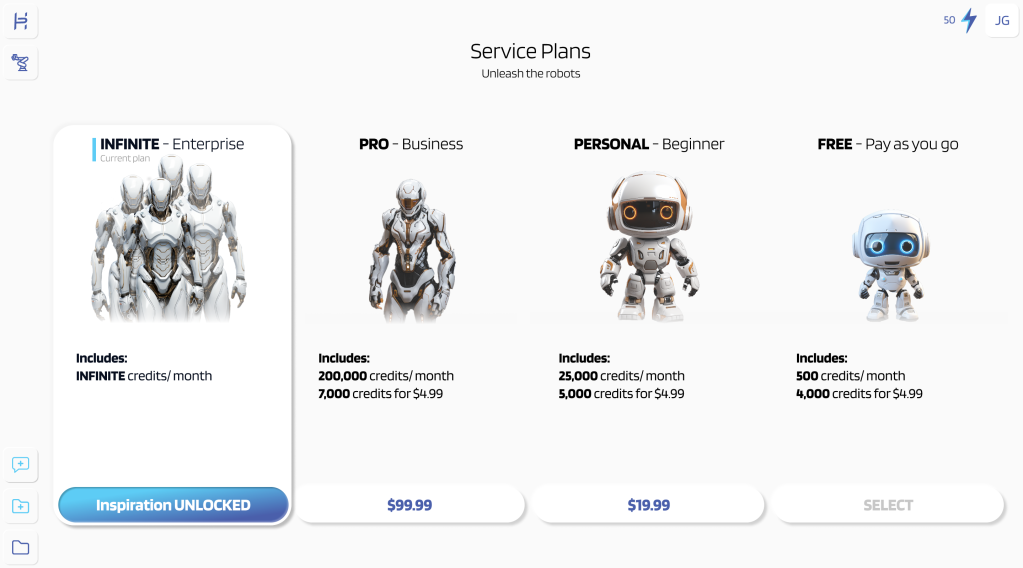

Free tier is critical for adoption

Lower barrier to entry with free baseline access increases willingness to explore premium features.

White-label opportunity for enterprises

Enterprises want branded AI solutions for their teams, creating a B2B revenue stream beyond subscriptions.

Define & Strategize

Translating insights into actionable strategy

User Personas

The Knowledge Worker

Product Managers, Researchers, Analysts

"I need to quickly test multiple AI models to find the best one for my use case."

Pain Points:

- Cost fatigue from multiple subscriptions

- Time wasted switching between tools

- Difficulty comparing outputs side-by-side

Needs:

Unified dashboard, comparison tools, transparent pricing

The Creative Professional

Designers, Writers, Content Creators

"I want to experiment with different AI tools without committing to expensive plans."

Pain Points:

- Budget constraints limiting experimentation

- No unified search across AI tools

- Overwhelming number of options

Needs:

Free tier access, discovery features, creative workflows

The Enterprise Decision Maker

CTOs, Team Leads, Department Heads

"My team needs a centralized AI solution with consistent access and billing."

Pain Points:

- Team coordination across scattered tools

- Lack of white-label solutions

- Complex vendor management

Needs:

White-label capabilities, team management, enterprise billing

Design Principles

Simplify Discovery

Make it effortless for users to find and test the right AI tool for their needs

Reduce Friction

Eliminate barriers between user intent and AI-powered outcomes

Enable Comparison

Provide clear, side-by-side comparisons of AI tool outputs and features

Scale Intelligently

Build a foundation that supports both individual users and enterprise teams

How might we give users one place to discover and use AI without friction?

- ...reduce the cost barrier for users who want to experiment with multiple AI tools?

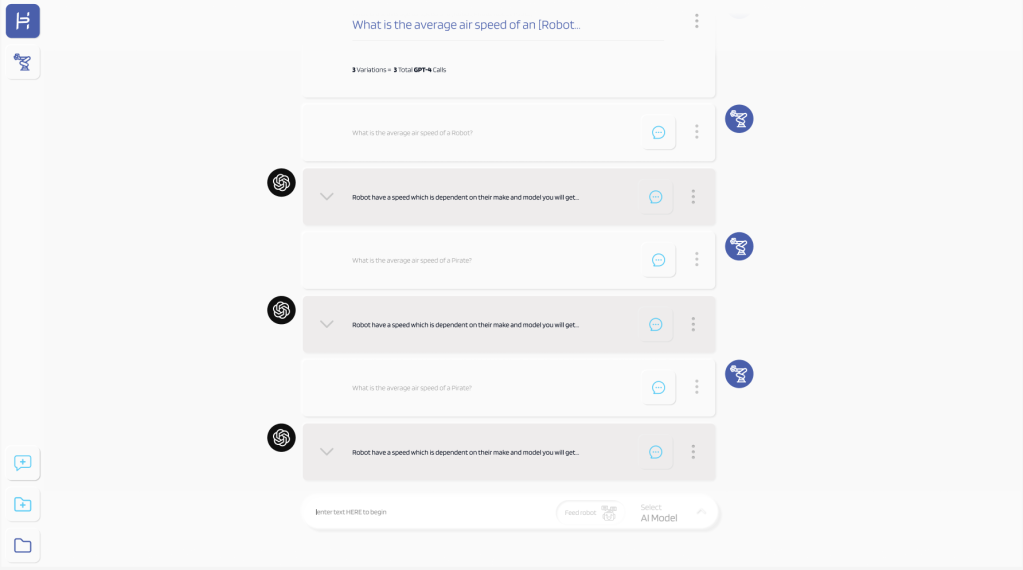

- ...enable side-by-side comparison of AI outputs without manual copy-pasting?

- ...create a scalable solution that serves both individuals and enterprise teams?

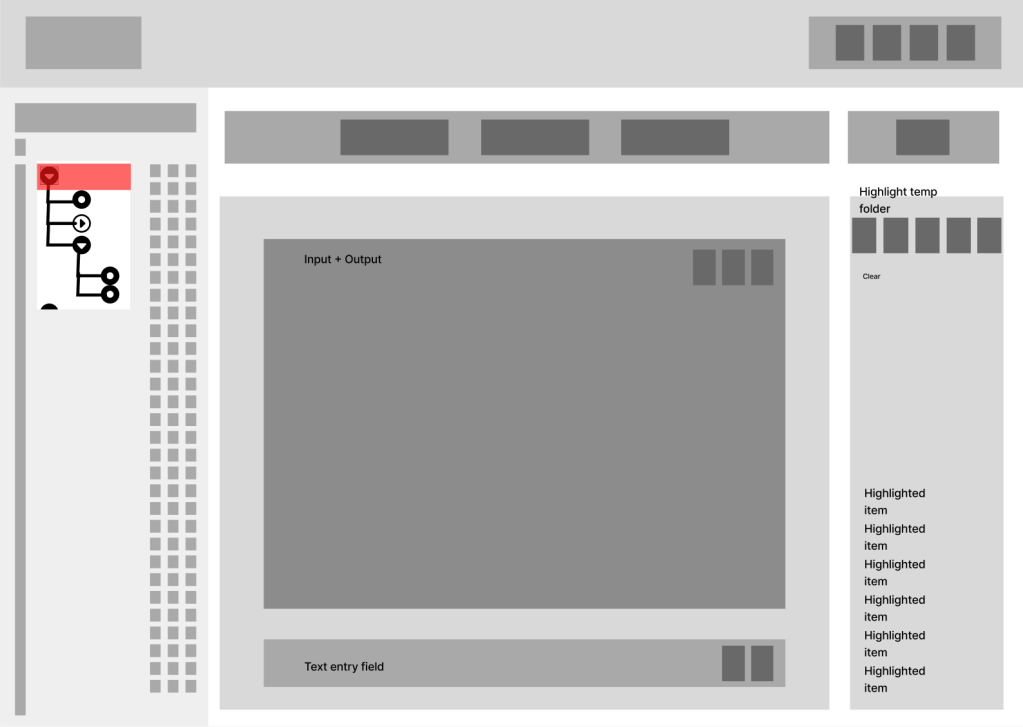

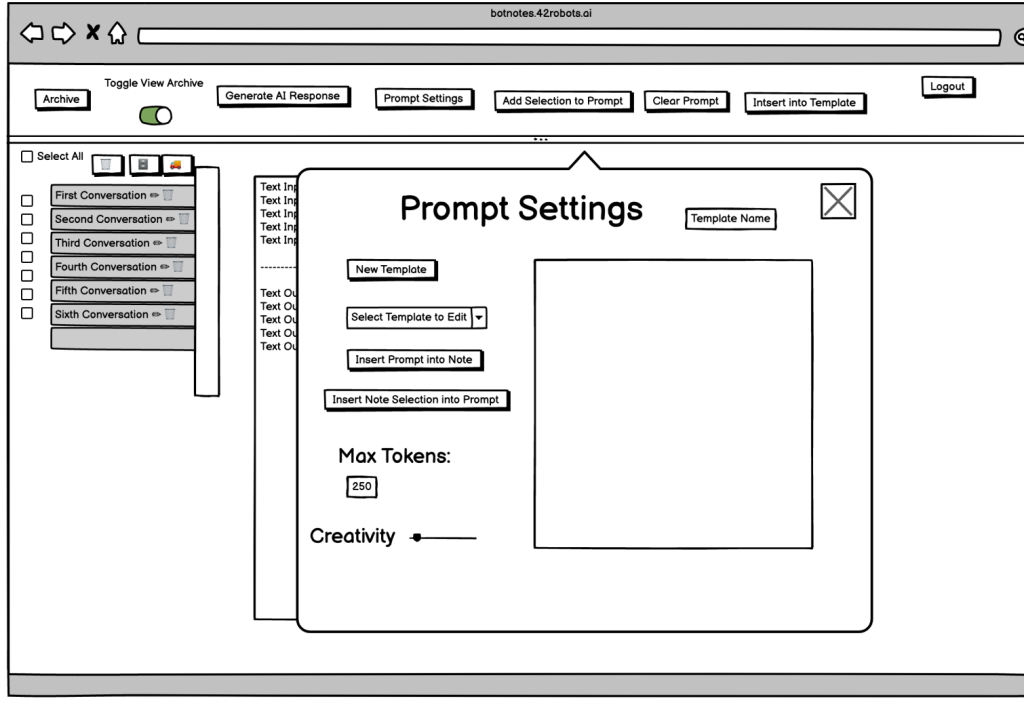

Design Evolution

From rough sketches to refined interface

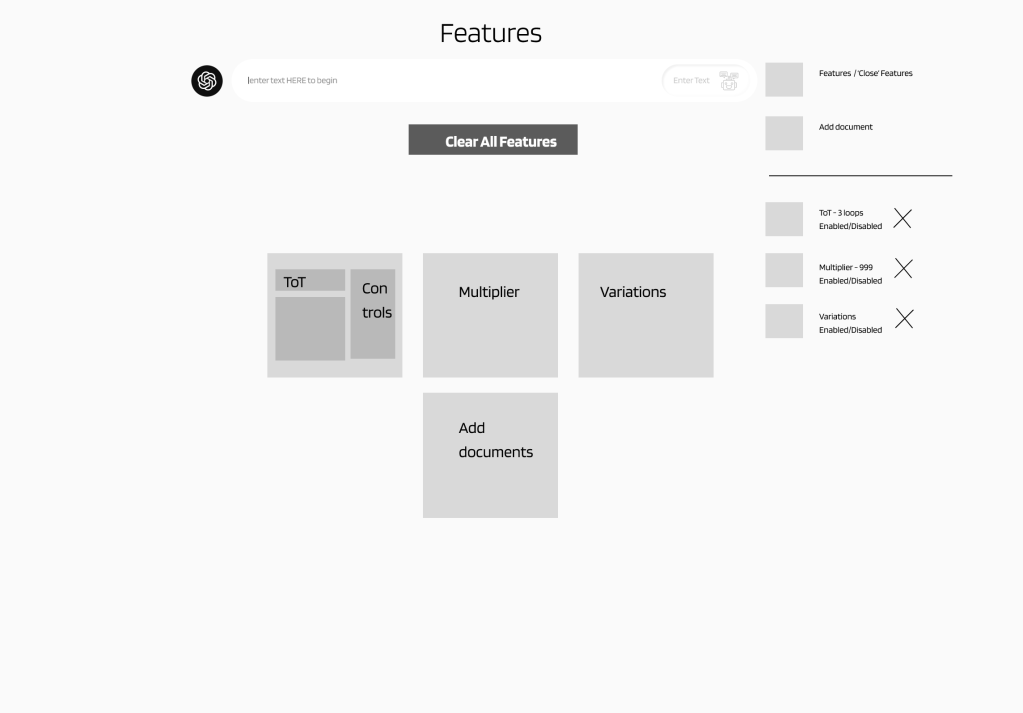

Wireframe Progression

The Roads Not Taken

Tool-First Navigation

Rejected: Users needed workflow-based navigation, not tool categories. Organizing the interface by tool added cognitive load.

Dense Top Bar Layout

Rejected: A/B testing showed sidebar navigation performed 35% better for tool discovery and reduced clicks.

Key Design Decisions

- Sidebar vs. Top Navigation: A/B testing revealed that sidebar navigation improved tool discoverability by 35% and reduced clicks to target tools. Users found it easier to scan vertically through tool categories.

- Card-Based vs. List View: Card-based layouts tested better for tool browsing, providing visual hierarchy and quick scanning. List view is reserved for detailed comparisons.

- Unified Search Bar Placement: Prominent search placement at the top center became the primary entry point, enabling cross-tool search that users consistently requested.

Testing & Validation

Data-driven iteration and refinement

A/B Test: Navigation Pattern

Sidebar Navigation

- Avg. clicks to tool: 2.1 ↓35%

- Discovery rate: 84% ↑22 percentage points (35% relative improvement)

- User satisfaction: 8.9/10 ↑31%

Top Bar Navigation

- Avg. clicks to tool: 3.2

- Discovery rate: 62%

- User satisfaction: 6.8/10

Result: Sidebar navigation improved discoverability by 35% and reduced clicks to target tools, validating our design direction for tool density and organization.

Note: Testing conducted with 10 participants (5 per variant). Results informed design direction but sample size limits statistical significance.
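For readers who want to check the arithmetic, here is a minimal Python sketch that recomputes the percentage changes from the raw figures reported above. The function and variable names are illustrative assumptions, not part of the project. It also shows why the discovery rate can be described both as a 22-point gain and as a roughly 35% relative improvement.

```python
def relative_change(baseline: float, variant: float) -> float:
    """Percent change of the variant relative to the baseline."""
    return (variant - baseline) / baseline * 100


# Figures reported in the A/B test: (top bar baseline, sidebar variant).
metrics = {
    "avg_clicks_to_tool": (3.2, 2.1),
    "discovery_rate_pct": (62.0, 84.0),
    "satisfaction_out_of_10": (6.8, 8.9),
}

for name, (top_bar, sidebar) in metrics.items():
    print(f"{name}: {relative_change(top_bar, sidebar):+.1f}%")

# Absolute gain in discovery rate, in percentage points (84 - 62).
discovery_point_gain = metrics["discovery_rate_pct"][1] - metrics["discovery_rate_pct"][0]
print(f"discovery rate gain: {discovery_point_gain:.0f} percentage points")
```

The relative changes come out at about -34.4%, +35.5%, and +30.9%, which round to the 35%, 35%, and 31% quoted in this case study.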

Iteration Timeline

Initial Prototype Testing

Tested with 5 users. Discovered navigation confusion and unclear tool categorization.

Key Change: Refined sidebar navigation for better tool density

Refined Navigation & Cards

A/B tested navigation patterns. Sidebar showed 35% improvement in discoverability.

Key Change: Enhanced card layout with better visual hierarchy

Final Polish & Validation

Final testing with 3 users. User satisfaction increased to 8.9/10. Ready for investor demo.

Key Change: Pricing clarity improvements based on feedback

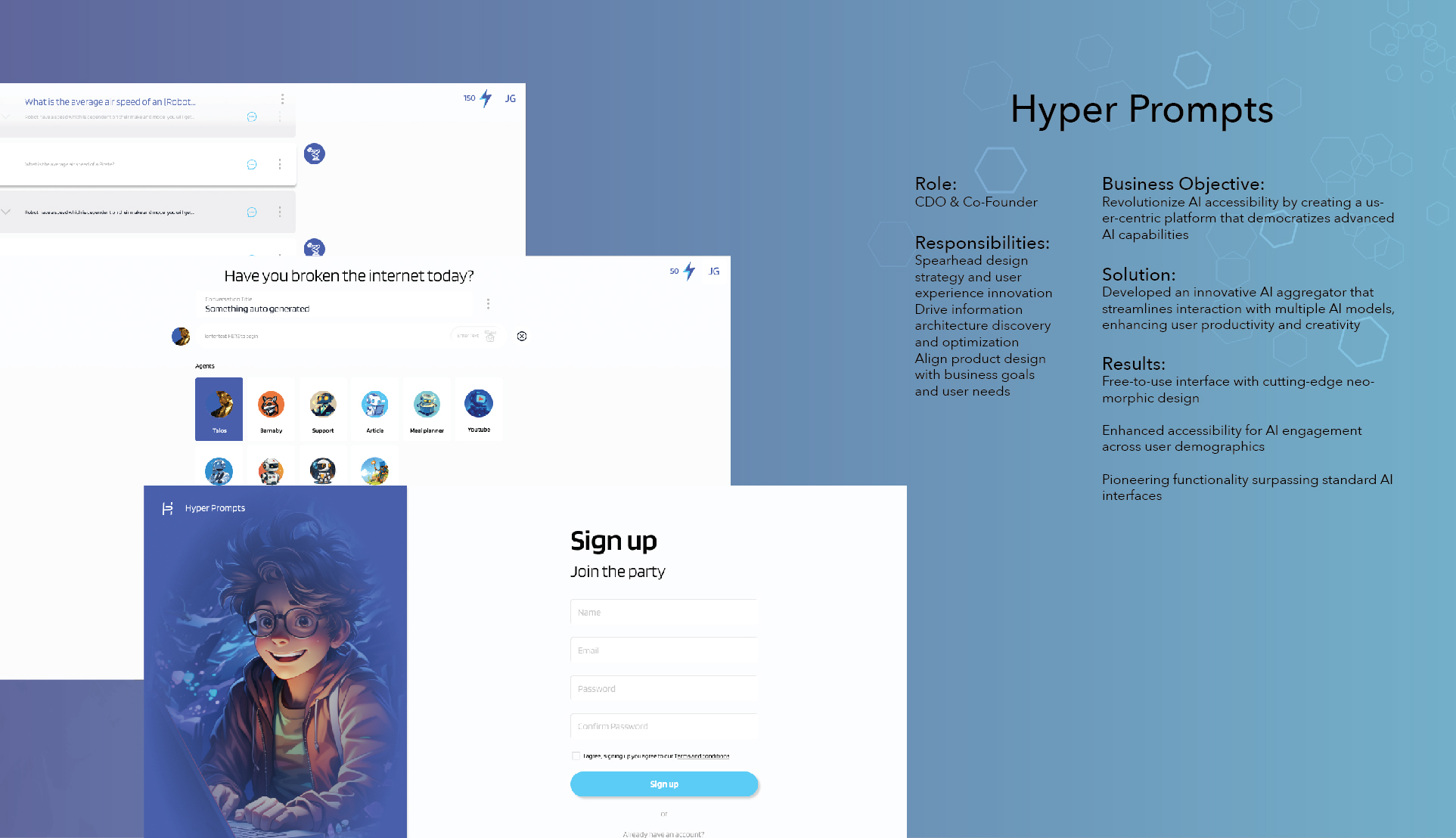

The Solution

A unified platform for AI discovery and use

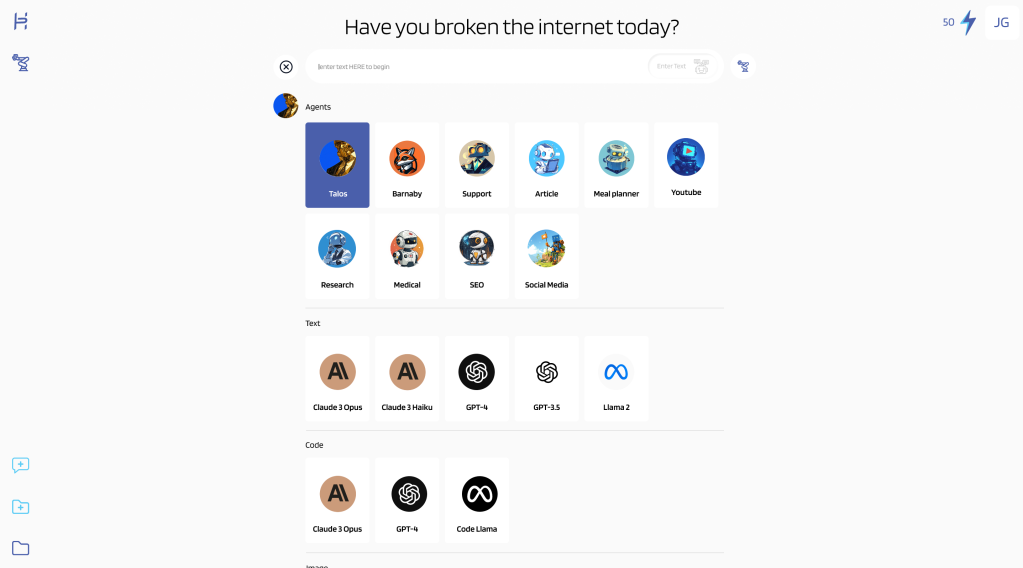

Hyper Prompts aggregates 30+ AI tools into a single, intuitive dashboard. Users can discover, test, and compare AI models without managing multiple subscriptions or switching contexts.

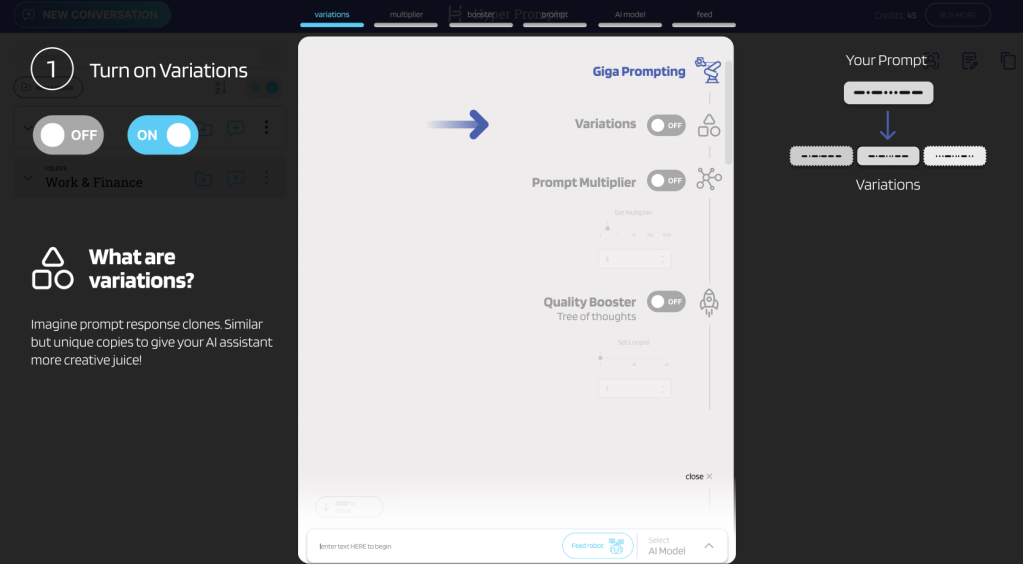

Key Features

Unified AI Library

Browse and filter through 30+ AI tools in a single interface. Smart categorization by use case (text, image, code, analysis) makes discovery effortless.

CORE FEATURE

Smart Comparison Tool

Compare outputs from multiple AI models side-by-side with the same prompt. Instantly identify which tool delivers the best results.

USER REQUESTED
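As a rough illustration of the comparison idea, the Python sketch below sends one prompt to several models at once and collects the outputs by model name. It is not the Hyper Prompts implementation: `compare_models` and the two stub models are hypothetical stand-ins, and a real integration would call each provider's own API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A "model" here is any callable that maps a prompt to a text output.
ModelFn = Callable[[str], str]


def compare_models(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently; return outputs by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


# Hypothetical stand-ins for real model calls.
def stub_model_a(prompt: str) -> str:
    return f"[A] {prompt.upper()}"


def stub_model_b(prompt: str) -> str:
    return f"[B] {prompt[::-1]}"


results = compare_models("hello", {"model_a": stub_model_a, "model_b": stub_model_b})
for name, output in results.items():
    print(f"{name:<10} | {output}")
```

Running the calls concurrently keeps the wait close to that of the slowest model rather than the sum of all of them, which matters when outputs are shown side by side.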

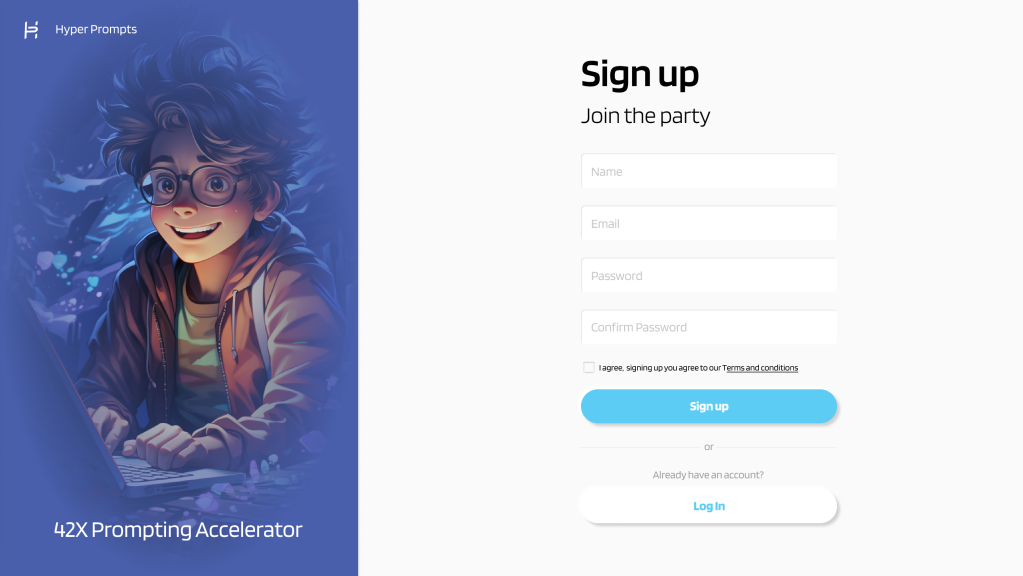

Frictionless Onboarding

Get started in seconds with a streamlined sign-up flow. No credit card required for free tier access.

CONVERSION

Flexible Pricing

Clear pricing tiers from free to enterprise, with transparent credit systems. Users know exactly what they're paying for.

MONETIZATION

Accessibility Features

- Keyboard Navigation: Full keyboard support for all interactions

- Screen Reader Optimized: Semantic HTML with ARIA labels

- High Contrast Mode: 4.5:1 contrast ratio minimum

- Responsive Text Scaling: Supports up to 200% zoom
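The 4.5:1 minimum can be checked programmatically. The Python sketch below follows the relative luminance and contrast ratio definitions published in WCAG 2.x. The example colors are arbitrary assumptions for illustration, not the product's actual palette.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance of an sRGB color: 0.0 is black, 1.0 is white."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """WCAG contrast ratio, from 1.0 (identical colors) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_minimum(foreground: RGB, background: RGB, minimum: float = 4.5) -> bool:
    """True if the color pair reaches the given minimum contrast ratio."""
    return contrast_ratio(foreground, background) >= minimum


# Illustrative colors only: mid-gray text on a white background.
gray_text, white = (118, 118, 118), (255, 255, 255)
print(f"{contrast_ratio(gray_text, white):.2f}:1, passes: {meets_minimum(gray_text, white)}")
```

A check like this could run against design tokens so that a palette change cannot silently drop text below the stated minimum.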

Impact & Results

Measurable success and business validation

Before vs. After Launch

| Metric | Before | After | Change |
|---|---|---|---|
| Tool Discoverability | 62% | 84% | +35% |
| User Satisfaction | 6.8/10 | 8.9/10 | +31% |
| Product-Market Fit | Unvalidated | Validated | |

Key Achievements

- Secured $250K in seed funding through compelling design presentation

- Generated $140K in white-label client contracts

- Validated product-market fit through data-driven testing

- Built scalable design system for rapid deployment

- Improved user satisfaction by 31% through iterative testing

- Delivered complete MVP within 7-month timeline

Reflection & Growth

Lessons learned and growth mindset

Key Learnings

Designing for investors is designing for users

The biggest insight was realizing that investor demos aren't just about aesthetics—they're about proving you understand your users deeply. Every design decision I presented to investors was rooted in research data and user pain points.

A/B testing saves months of debate

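Rather than relying on opinions about navigation patterns, we ran A/B tests early. The data pointed clearly to sidebar navigation, which outperformed the top bar by 35% on tool discoverability, although with 10 participants the result is directional rather than statistically conclusive. Let the users decide.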

Design systems aren't overhead—they're accelerators

Building a scalable design system upfront felt like it would slow us down, but it became our competitive advantage. When we landed white-label contracts, we could customize and deploy in days instead of weeks.

User interviews reveal what surveys cannot

The most valuable insights came from watching users struggle with existing AI tools during interviews. They couldn't articulate "I need unified search" in a survey, but watching them copy-paste between tabs made the problem crystal clear.

What I'd Do Differently

Integrate pricing experiments earlier

We waited until iteration 3 to test pricing clarity. Users were confused about tier differences earlier, and we could have validated pricing presentation alongside navigation patterns.

Document decision rationale in real-time

I kept mental notes about why we chose certain directions, but documenting them immediately would have saved time when stakeholders asked "why did we do it this way?" months later.

Involve engineering earlier in design system planning

While the design system was scalable visually, some component decisions created implementation challenges. Having engineering input during the design system phase would have resulted in components that were both beautiful and technically optimal.

Behind the Scenes

"The best design projects are the ones that humble you, challenge your assumptions, and force you to grow. Hyper Prompts did all three."

The Finished Product

A unified AI aggregation platform that secured $250K in seed funding